Distributed UIMA Cluster Computing

Written and maintained by the Apache

UIMATMDevelopment Community

Version 3.0.0

Copyright © 2012 The Apache Software Foundation

Copyright © 2012 International Business Machines Corporation

License and Disclaimer

The ASF licenses this documentation to you under the Apache License, Version 2.0 (the ”License”); you may not

use this documentation except in compliance with the License. You may obtain a copy of the License

at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, this documentation and its contents are distributed under

the License on an ”AS IS” BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express

or implied. See the License for the specific language governing permissions and limitations under the

License.

Trademarks

All terms mentioned in the text that are known to be trademarks or service marks have been appropriately capitalized.

Use of such terms in this book should not be regarded as affecting the validity of the the trademark or service

mark.

Publication date: April 2019

Table of Contents

List of Figures

Part I

DUCC Concepts

Chapter 1

DUCC Overview

1.1 What is DUCC?

DUCC stands for Distributed UIMA Cluster Computing. DUCC is a cluster management system providing

tooling, management, and scheduling facilities to automate the scale-out of applications written to the UIMA

framework.

Core UIMA provides a generalized framework for applications that process unstructured information such as human

language, but does not provide a scale-out mechanism. UIMA-AS provides a scale-out mechanism to distribute UIMA

pipelines over a cluster of computing resources, but does not provide job or cluster management of the resources.

DUCC defines a formal job model that closely maps to a standard UIMA pipeline. Around this job model

DUCC provides cluster management services to automate the scale-out of UIMA pipelines over computing

clusters.

As of DUCC version 3.0.0 both UIMAv2 and UIMAv3 applications are supported. Although DUCC distributes a UIMA-AS

runtime, it only uses UIMA classes specified by the user’s application classpath.

1.2 DUCC Job Model

The Job Model defines the steps necessary to scale-up a UIMA pipeline using DUCC. The goal of DUCC is to

scale-up any UIMA pipeline, including pipelines that must be deployed across multiple machines using shared

services.

The DUCC Job model consists of standard UIMA components: a Collection Reader (CR), a CAS Multiplier (CM),

application logic as implemented one or more Analysis Engines (AE), and a CAS Consumer (CC).

The Collection Reader builds input CASs and forwards them to the UIMA pipelines. In the DUCC model, the CR is run in a

process separate from the rest of the pipeline. In fact, in all but the smallest clusters it is run on a different physical machine

than the rest of the pipeline. To achieve scalability, the CR must create very small CASs that do not contain application

data, but which contain references to data; for instance, file names. Ideally, the CR should be runnable in a process

not much larger than the smallest Java virtual machine. Later sections demonstrate methods for achieving

this.

Each pipeline must contain at least one CAS Multiplier which receives the CASs from the CR. The CMs encapsulate the

knowledge of how to receive the data references in the small CASs received from the CRs and deliver the referenced data to

the application pipeline. DUCC packages the CM, AE(s), and CC into a single process, multiple instances of which are then

deployed over the cluster.

A DUCC job therefore consists of a small specification containing the following items:

- The name of a resource containing the CR descriptor.

- The name of a resource containing the CM descriptor.

- The name of a resource containing the AE descriptor.

- The name of a resource containing the CC descriptor.

- Other information required to parameterize the above and identify the job such as log directory, working

directory, desired scale-out, classpath, etc. These are described in detail in subsequent sections.

On job submission, DUCC creates a single process executing the CR and one or more processes containing the analysis

pipeline.

DUCC provides other facilities in support of scale-out:

- The ability to reserve all or part of a node in the cluster.

- Automated management of services required in support of jobs.

- The ability to schedule and execute arbitrary processes on nodes in the cluster.

- Debugging tools and support.

- A web server to display and manage work and cluster status.

- A CLI and a Java API to support the above.

1.3 DUCC From UIMA to Full Scale-out

In this section we demonstrate the progression of a simple UIMA pipeline to a fully scaled-out job running under

DUCC.

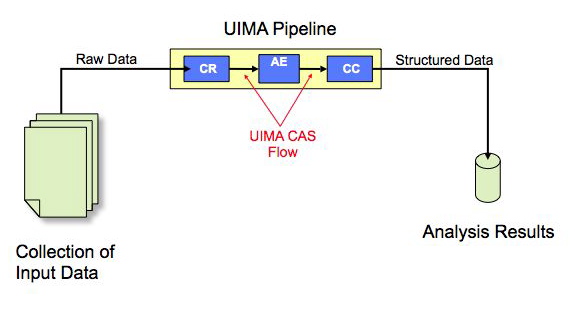

UIMA Pipelines

A normal UIMA pipeline contains a Collection Reader (CR), one or more Analysis Engines (AE) connected in a pipeline, and

a CAS Consumer (CC) as shown in Figure 1.1.

UIMA-AS Scaled Pipeline

With UIMA-AS the CR is separated into a discrete process and a CAS Multiplier (CM) is introduced into the pipeline as an

interface between the CR and the pipeline, as shown in Figure 1.2 below. Multiple pipelines are serviced by the CR and are

scaled-out over a computing cluster. The difficulty with this model is that each user is individually responsible for finding and

scheduling computing nodes, installing communication software such as ActiveMQ, and generally managing the distributed

job and associated hardware.

UIMA Pipeline Scaled By DUCC

DUCC is a UIMA and UIMA-AS-aware cluster manager. To scale out work under DUCC the developer tells DUCC what

the parts of the application are, and DUCC does the work to build the scale-out via UIMA/AS, to find and

schedule resources, to deploy the parts of the application over the cluster, and to manage the jobs while it

executes.

On job submission, the CR is wrapped with a DUCC main class and launched as a Job Driver (or JD). The DUCC main

class establishes communication with other DUCC components and instantiates the CR. If the CR initializes

successfully, and indicates that there are greater than 0 work items to process, the specified CM, AE and CC

components are assembled into an aggregate, wrapped with a DUCC main class, and launched as a Job Process (or

JP).

The JP will replicate the aggregate as many times as specified, each aggregate instance running in a single thread. When the

aggregate initializes, and whenever an aggregate thread needs work, the JP wrapper will fetch the next work item from the

JD, as shown in Figure 1.3 below.

UIMA Pipeline with User-Supplied DD Scaled By DUCC

Application programmers may supply their own Deployment Descriptors to control intra-process threading and scale-out. If a

DD is specified in the job parameters, DUCC will launch each JP with the specified UIMA-AS service instantiated in-process,

as depicted in Figure 1.4 below. In this case the user can still specify how many work items to deliver to the service

concurrently.

1.4 Error Management

DUCC provides a number of facilities to assist error management:

- DUCC captures exceptions in the JPs and delivers them to the Job Drivers. The JD wrappers implement logic

to enforce error thresholds, to identify and log errors, and to reflect job problems in the DUCC Web Server.

Error thresholds are configurable both globally and on a per-job basis.

- Error and timeout thresholds are implemented for both the initialization phase of a pipeline and the execution

phase.

- Retry-after-error is supported: if a process has a failure on some CAS after initialization is successful, the

process is terminated and all affected CASs are retried, up to some configurable threshold.

- To avoid disrupting existing workloads by a job that will fail to run, DUCC ensures that JD and JP processes

can successfully initialize before fully scaling out a job.

- Various error conditions encountered while a job is running will prevent a problematic job from continuing

scale out, and can result in termination of the job.

1.5 Cluster and Job Management

DUCC supports management of multiple jobs and multiple users in a distributed cluster:

-

Multiple User Support

- When properly configured, DUCC runs all work under the identity of the submitting

user. Logs are written with the user’s credentials into the user’s file space designated at job submission.

-

Fair-Share Scheduling

- DUCC provides a Fair-Share scheduler to equitably share resources among multiple users.

The scheduler also supports semi-permanent reservation of machines.

-

Service Management

- DUCC provides a Service Manager capable of automatically starting, stopping, and

otherwise managing and querying both UIMA-AS and non-UIMA-AS services in support of jobs.

-

Job Lifetime Management and Orchestration

- DUCC includes an Orchestrator to manage the lifetimes of all

entities in the system.

-

Node Sharing

- DUCC allocates processes for one or more users on a node, each with a specified amount of memory.

DUCC’s preferred mechanism for constraining memory use is Linux Control Groups, or CGroups. For nodes

that do not support CGroups, DUCC agents monitor RAM use and kill processes that exceed their share size

by a settable fudge factor.

-

DUCC Agents

- DUCC Agents manage each node’s local resources and all processes started by DUCC. Each node in a

cluster has exactly one Agent. The Agent

- Monitors and reports node capabilities (memory, etc) and performance data (CPU busy, swap, etc).

- Starts, stops, and monitors all processes on behalf of users.

- Patrols the node for “foreign” (non-DUCC) processes, reporting them to the Web Server, and optionally

reaping them.

- Ensures job processes do not exceed their declared memory requirements through the use of Linux

CGroups.

-

DUCC Web server

- DUCC provides a web server displaying all aspects of the system:

- All jobs in the system, their current state, resource usage, etc.

- All reserved resources and associated information (owner, etc.), including the ability to request and cancel

reservations.

- All services, including the ability to start, stop, and modify service definitions.

- All nodes in the system and their status, usage, etc.

- The status of all DUCC management processes.

- Access to documentation.

-

Cluster Management Support

- DUCC provides system management support to:

- Start, stop, and query full DUCC systems.

- Start, stop, and quiesce individual DUCC components.

- Add and delete nodes from the DUCC system.

- Discover DUCC processes (e.g. after partial failures).

- Find and kill errant job processes belonging to individual users.

- Monitor and display inter-DUCC messages.

1.6 Security Measures

The following DUCC security measures are provided:

-

user credentials

- DUCC instantiates user processes using a setuid root executable named ducc_ling. See more at

ducc_ling.

-

command line interface

- The CLI employs HTTP to send requests to the DUCC controller. The CLI creates and

employs public and private security keys in the user’s home directory for authentication of HTTP requests.

The controller validates requests via these same security keys.

-

webserver

- The webserver facilitates operational control and therefore authentication is desirable.

- Each user has the ability to control certain aspects of only his/her active submissions.

- Each administrator has the ability to control certain aspects of any user’s active submissions, as well as

modification of some DUCC operational characteristics.

A simple interface is provided so that an installation can plug-in a site specific authentication mechanism comprising

userid and password.

-

ActiveMQ

- DUCC uses ActiveMQ for administrative communication. AMQ authentication is used to prevent arbitrary

processes from participating. But when testing DUCC on a simulated cluster the AMQ broker runs without any access

restrictions so that it can be used as an application broker for UIMA-AS services used in simulation tests. See

start_sim.

1.6.1 ducc_ling

ducc_ling contains the following functions, which the security-conscious may verify by examining the source in

$DUCC_HOME/duccling. All sensitive operations are performed only AFTER switching userids, to prevent unauthorized

root access to the system.

- Changes it’s real and effective userid to that of the user invoking the job.

- Optionally redirects its stdout and stderr to the DUCC log for the current job.

- Optionally redirects its stdio to a port set by the CLI, when a job is submitted.

- “Nice”s itself to a “worse” priority than the default, to reduce the chances that a runaway DUCC job could

monopolize a system.

- Optionally sets user limits.

- Prints the effective limits for a job to both the user’s log, and the DUCC agent’s log.

- Changes to the user’s working directory, as specified by the job.

- Optionally establishes LD_LIBRARY_PATH for the job from the environment variable DUCC_LD_LIBRARY_PATH

if set in the DUCC job specification. (Secure Linux systems will prevent LD_LIBRARY_PATH from being set

by a program with root authority, so this is done AFTER changing userids).

- ONLY user ducc may use the ducc_ling program in a privileged way. Ducc_ling contains checks to prevent even

user root from using it for privileged operations.

1.7 Security Issues

The following DUCC security issues should be considered:

-

submit transmission ’sniffed’

- In the event that the DUCC submit command is ’sniffed’ then the user

authentication mechanism is compromised and user masquerading is possible. That is, the userid encryption

mechanism can be exploited such that user A can submit a job pretending to be user B.

-

user ducc password compromised

- In the event that the ducc user password is compromised then the root

privileged command ducc_ling can be used to become any other user except root.

-

user root password compromised

- In the event that the root user password is compromised DUCC provides no

protection. That is, compromising the root user is equivalent to compromising the DUCC user password.

Chapter 2

Glossary

-

Agent

- DUCC Agent processes run on every node in the system. The Agent receives orders to start and stop processes

on each node. Agents monitors nodes, sending heartbeat packets with node statistics to interested components

(such as the RM and web-server). If CGroups are installed in the cluster, the Agent is responsible for managing

the CGroups for each job process. All processes other than the DUCC management processes are are managed

as children of the agents.

-

Autostarted Service

- An autostarted service is a registered service that is started automatically by DUCC when

the DUCC system is booted.

-

Dependent Service or Job

- A dependent service or job is a service or job that specifies one or more service

dependencies in their job specification. The service or job is dependent upon the referenced service being

operational before being started by DUCC.

-

DUCC

- Distributed UIMA Cluster Computing.

-

DUCC-MON

- DUCC-MON is the DUCC web-server.

-

Job

- A DUCC job consists of the components required to deploy and execute a UIMA pipeline over a computing

cluster. It consists of a JD to run the Collection Reader, a set of JPs to run the UIMA AEs, and a Job

Specification to describe how the parts fit together.

-

Job Driver (JD)

- The Job Driver is a thin wrapper that encapsulates a Job’s Collection Reader. The JD executes

as a process that is scheduled and deployed by DUCC.

-

Job Process (JP)

- The Job Process is a thin wrapper that encapsulates a job’s pipeline components. The JP

executes in a process that is scheduled and deployed by DUCC.

-

Job Specification

- The Job Specification is a collection of properties that describe work to be scheduled and

deployed by DUCC. It identifies the UIMA components (CR, AE, etc) that comprise the job and the system-wide

properties of the job (CLASSPATHs, RAM requirements, etc).

-

Machine

- A physical computing resource managed by the DUCC Resource Manager.

-

Managed Reservation

- A DUCC managed reservation comprises an arbitrary process that is deployed on the

computing cluster within a share assigned by the DUCC scheduler.

-

Node

- See Machine.

-

Orchestrator (OR)

- The Orchestrator manages the life cycle of all entities within DUCC.

-

Process

- A process is one physical process executing on a machine in the DUCC cluster. DUCC jobs are comprised

of one or more processes (JDs and JPs). Each process is assigned one or more shares by the DUCC scheduler.

-

Process Manager (PM)

- The Process Manager coordinates distribution of work among the Agents.

-

Registered Service

- A registered service is a service that is registered with DUCC. DUCC saves the service

specification and fully manages the service, insuring it is running when needed, and shutdown when not.

-

Resource Manager (RM)

- The Resource Manager schedules physical resources for DUCC work.

-

Service Endpoint

- In DUCC, the service endpoint provides a unique identifier for a service. In the case of UIMA-AS

services, the endpoint also serves as a well-known address for contacting the service.

-

Service Instance

- A service instance is one physical process which runs a CUSTOM or UIMA-AS service. UIMA-AS

services are usually scaled-out with multiple instances implementing the same underlying service logic.

-

Service Manager (SM)

- The Service Manager manages the life-cycles of UIMA-AS and CUSTOM services. It

coordinates registration of services, starting and stopping of services, and ensures that services are available

and remain available for the lifetime of the jobs.

-

Share Quantum

- The DUCC scheduler abstracts the nodes in the cluster as a single large conglomerate of resources:

memory, processor cores, etc. The scheduler logically decomposes the collection of resources into some number

of equal-sized atomic units. Each unit of work requiring resources is apportioned one or more of these atomic

units. The smallest possible atomic unit is called the share quantum, or simply, share.

-

Weighted Fair Share

- A weighted fair share calculation is used to apportion resources equitably to the outstanding

work in the system. In a non-weighted fair-share system, all work requests are given equal consideration to all

resources. To provide some (“more important”) work more than equal resources, weights are used to bias the

allotment of shares in favor of some classes of work.

-

Work Items

- A DUCC work item is one unit of work to be completed in a single DUCC process. It is usually

initiated by the submission of a single CAS from the JD to one of the JPs. It could be thought of as a single

“question” to be answered by a UIMA analytic, or a single “task” to complete. Usually each DUCC JP executes

many work items per job.

-

$DUCC_HOME

- The root of the installed DUCC runtime, e.g. /home/ducc/ducc_runtime. It need not be set in

the environment, although the examples in this document assume that it has been.

Part II

Ducc Users Guide

Chapter 3

Command Line Interface

Overview

The DUCC CLI is the primary means of communication with DUCC. Work is submitted, work is canceled, work is

monitored, and work is queried with this interface.

All parameters may be passed to all the CLI commands in the form of Unix-like “long-form” (key, value) pairs, in which the

key is proceeded by the characters “--”. As well, the parameters may be saved in a standard Java Properties file, without

the leading “--” characters. Both a properties file and command-line parameters may be passed to each CLI.

When both are present, the parameters on the command line take precedence. Take, for example the following

simple job properties file, call it 1.job, where the environment variable “DH” has been set to the location of

$DUCC_HOME.

description Test job 1

classpath ${DH}/lib/uima-ducc/examples/*

environment AE_INIT_TIME=5 AE_INIT_RANGE=5 LD_LIBRARY_PATH=/a/nother/path

scheduling_class normal

driver_descriptor_CR org.apache.uima.ducc.test.randomsleep.FixedSleepCR

driver_descriptor_CR_overrides jobfile=${DH}/lib/examples/simple/1.inputs compression=10

error_rate=0.0

driver_jvm_args -Xmx500M

process_descriptor_AE org.apache.uima.ducc.test.randomsleep.FixedSleepAE

process_memory_size 4

process_jvm_args -Xmx100M

process_pipeline_count 2

process_per_item_time_max 5

process_deployments_max 999

This can be submitted, overriding the scheduling class and memory, thus:

ducc_submit --specification 1.job --process_memory_size 16 --scheduling_class high

The DUCC CLI parameters are now described in detail.

3.1 The DUCC Job Descriptor

The DUCC Job Descriptor includes properties to enable automated management and scale-out over large computing clusters.

The job descriptor includes

- References to the various UIMA components required by the job (CR, CM, AE, CC, and maybe DD)

- Scale-out requirements: number of processes, number of threads per process, etc

- Environment requirements: log directory, working directory, environment variables, etc,

- JVM parameters

- Scheduling class

- Error-handling preferences: acceptable failure counts, timeouts, etc

- Debugging and monitoring requirements and preferences

3.2 Operating System Limit Support

The CLI supports specification of operating system limits applied to the various job processes. To specify a limit, pass the

name of the limit and its value in the environment specified in the job. Limits are named with the string

“DUCC_RLIMIT_name” where “name” is the name of a specific limit. Supported limits include:

- DUCC_RLIMIT_CORE

- DUCC_RLIMIT_CPU

- DUCC_RLIMIT_DATA

- DUCC_RLIMIT_FSIZE

- DUCC_RLIMIT_MEMLOCK

- DUCC_RLIMIT_NOFILE

- DUCC_RLIMIT_NPROC

- DUCC_RLIMIT_RSS

- DUCC_RLIMIT_STACK

- DUCC_RLIMIT_AS

- DUCC_RLIMIT_LOCKS

- DUCC_RLIMIT_SIGPENDING

- DUCC_RLIMIT_MSGQUEUE

- DUCC_RLIMIT_NICE

- DUCC_RLIMIT_STACK

- DUCC_RLIMIT_RTPRIO

See the Linux documentation for details on the meanings of these limits and their values.

For example, to set the maximum number of open files allowed in any job process, specify an environment similar to this

when submitting the job:

ducc_submit .... --environment="DUCC_RLIMIT_NOFILE=1024" ...

3.3 Command Line Forms

The Command Line Interface is provided in several forms:

-

1.

- A wrapper script around the uima-ducc-cli.jar.

-

2.

- Direct invocation of each command’s class with the java command.

When using the scripts the full execution environment is established silently. When invoking a command’s class directly, the

java CLASSPATH must include the uima-ducc-cli.jar, as illustrated in the wrapper scripts.

3.4 DUCC Commands

The following commands are provided:

-

ducc_submit

- Submit a job for execution.

-

ducc_cancel

- Cancel a job in progress.

-

ducc_reserve

- Request a reservation of a machine.

-

ducc_unreserve

- Cancel a reservation.

-

ducc_monitor

- Monitor the progress of a job that is already submitted.

-

ducc_process_submit

- Submit an arbitrary process (managed reservation) for execution.

-

ducc_process_cancel

- Cancel an arbitrary process.

-

ducc_services

- Register, unregister, start, stop, modify, disable, enable, ignore references, observe references, and

query a service.

-

viaducc

- This is a script wrapper to facilitate execution of Eclipse workspaces as DUCC jobs as well as general

execution of arbitrary processes in DUCC-managed resources.

The next section describes these commands in detail.

3.5 ducc_submit

Description:

The submit CLI is used to submit work for execution by DUCC. DUCC assigns a unique id to the job and schedules it for

execution. The submitter may optionally request that the progress of the job is monitored, in which case the state of the job

as it progresses through its lifetime is printed on the console.

Usage:

-

Script wrapper

- $DUCC_HOME/bin/ducc_submit options

-

Java Main

- java -cp $DUCC_HOME/lib/uima-ducc-cli.jar org.apache.uima.ducc.cli.DuccJobSubmit options

Options:

-

--all_in_one <local | remote >

- Run driver and pipeline in single process. If local is specified, the process is

executed on the local machine, for example, in the current Eclipse session. If remote is specified, the jobs is

submitted to DUCC as a managed reservation and run on some (presumably larger) machine allocated by

DUCC.

-

--attach_console

- If specified, redirect remote stdout and stderr to the local submitting console.

-

--cancel_on_interrupt

- If specified, the job is monitored and will be canceled if the submit command is

interrupted, e.g. with CTRL-C. This option always implies --wait_for_completion.

-

--classpath [path-string]

- The CLASSPATH used for the job. If specified, this is used for both the Job Driver

and each Job Process. If not specified, the CLASSPATH of the process invoking this request is used.

-

--classpath_order [user-before-ducc | ducc-before-user]

- OBSOLETE - ignored.

-

--debug

- Enable debugging messages. This is primarily for debugging DUCC itself.

-

--description [text]

- The text is any string used to describe the job. It is displayed in the Web Server. When

specified on a command-line the text usually must be surrounded by quotes to protect it from the shell. The

default is “none”.

-

--driver_debug [debug-port]

- Append JVM debug flags to the JVM arguments to start the JobDriver in remote

debug mode. The remote process debugger will attempt to contact the specified port.

-

--driver_descriptor_CR [descriptor.xml]

- This is the XML descriptor for the Collection Reader. This

descriptor is a resource that is searched for in the filesystem or Java classpath as described in the notes below.

(Required)

-

--driver_descriptor_CR_overrides [list]

- This is the Job Driver collection reader configuration overrides. They are

specified as name/value pairs in a whitespace-delimited list. Example:

--driver_descriptor_CR_overrides name1=value1 name2=value2...

-

--driver_exception_handler [classname]

- This specifies a developer-supplied exception handler for the Job Driver. It

must implement org.apache.uima.ducc.IErrorHandler or extend org.apache.uima.ducc.ErrorHandler. A built-in default

exception handler is provided.

-

--driver_exception_handler_arguments [argument-string]

- This is a string containing arguments for the exception

handler. The contents of the string is entirely a function of the specified exception handler. If not specified, a null is

passed in.

The built-in default exception handler supports an argument string of the following form (with NO embedded

blanks):

max_job_errors=15 max_timeout_retrys_per_workitem=0

Note: When used as a CLI option, the string must usually be quoted to protect it from the shell, if it contains blanks.

The built-in default exception handler supports two arguments, whose default values are shown above. The

max_job_errors limit specifies the number of work item errors allowed before forcibly terminating the job. The

max_timeout_retrys_per_workitem limit specifies the number of times each work item is retried in the event of a

time-out.

-

--driver_jvm_args [list]

- This specifies extra JVM arguments to be provided to the Job Driver process. It is a

blank-delimited list of strings. Example:

--driver_jvm_args -Xmx100M -Xms50M

Note: When used as a CLI option, the list must usually be quoted to protect it from the shell.

-

--environment [env vars]

- Blank-delimited list of environment variables and variable assignments. Entries will be copied

from the user’s environment if just the variable name is specified, optionally with a final ’*’ for those with the same

prefix. If specified, this is used for all DUCC processes in the job. Example:

--environment TERM=xterm DISPLAY=:1.0 LANG UIMA_*

Additional entries may be copied from the user’s environment based on the setting of ducc.submit.environment.propagated in

the global DUCC configuration ducc.properties.

Note: When used as a CLI option, the environment string must usually be quoted to protect it from the

shell.

The following cause special runtime behavior. They are considered experimental and are not guaranteed to be effective

from release to release.

-

DUCC_USER_CP_PREPEND [path-to-ducc-jars-and-classes]

- If specified, this path is used to supply the DUCC classes required for running the Job Driver and Job

Process(es), normally set to $DUCC_HOME/lib/uima-ducc/users/*.

-

DUCC_WORK_ITEM_PERFORMANCE [true]

- If specified, DUCC will create subdirectory work-item-performance in the user specified log directory and

will place an individual json.gz file for each work item successfully processed comprising its performance

breakdown.

-

DUCC_KEEP_TEMPORARY_DESCRIPTORS [true]

- If specified, DUCC will not delete any temporary descriptors it creates (these are usually deleted when

the process ends.) DUCC may make a local file copy if the descriptor is in a jar, or if the filesystem is not

shared.

-

--help

- Prints the usage text to the console.

-

--jvm [path-to-java]

- States the JVM to use. If not specified, the same JVM used by the Agents is used. This is the full

path to the JVM, not the JAVA_HOME. Example:

--jvm /share/jdk1.6/bin/java

-

--log_directory [path-to-log-directory]

- This specifies the path to the directory for the user logs. If not fully specified

the path is made relative to the value of the --working_directory. If omitted, the default is $HOME/ducc/logs.

Example:

--log_directory /home/bob

Within this directory DUCC creates a sub-directory for each job, using the unique numerical ID of the job. The format

of the generated log file names as described here.

Note: The --log_directory specifies only the path to a directory where logs are to be stored. In order to manage

multiple processes running in multiple machines, sub-directory and file names are generated by DUCC and may not be

directly specified.

-

--process_debug [debug-port]

- Append JVM debug flags to the JVM arguments to start the Job Process in remote

debug mode. The remote process will start its debugger and attempt to contact the debugger (usually Eclipse) on the

specified port.

-

--process_deployments_max [integer]

- This specifies the maximum number of Job Processes to deploy at any given

time. If not specified, DUCC will attempt to provide the largest number of processes within the constraints of

fair_share scheduling and the amount of work remaining. in the job. Example:

--process_deployments_max 66

-

--process_descriptor_AE [descriptor]

- This specifies the Analysis Engine descriptor to be deployed in the Job

Processes. This descriptor is a resource that is searched for in the filesystem or Java classpath as described in the

notes below. It is mutually exclusive with --process_descriptor_DD. Example:

--process_descriptor_AE /home/billy/resource/AE_foo.xml

-

--process_descriptor_AE_overrides [list]

- This specifies AE overrides. It is a whitespace-delimited list of name/value

pairs. Example:

--process_descriptor_AE_Overrides name1=value1 name2=value2

-

--process_descriptor_CC [descriptor]

- This specifies the CAS Consumer descriptor to be deployed in the Job

Processes. This descriptor is a resource that is searched for in the filesystem or Java classpath as described in the

notes below. It is mutually exclusive with --process_descriptor_DD. Example:

--process_descriptor_CC /home/billy/resourceCCE_foo.xml

-

--process_descriptor_CC_overrides [list]

- This specifies CC overrides. It is a whitespace-delimited list of name/value

pairs. Example:

--process_descriptor_CC_overrides name1=value1 name2=value2

-

--process_descriptor_CM [descriptor]

- This specifies the CAS Multiplier descriptor to be deployed in the Job

Processes. This descriptor is a resource that is searched for in the filesystem or Java classpath as described in the

notes below. It is mutually exclusive with --process_descriptor_DD. Example:

--process_descriptor_CM /home/billy/resource/CM_foo.xml

-

--process_descriptor_CM_overrides [list]

- This specifies CM overrides. It is a whitespace-delimited list of name/value

pairs. Example:

--process_descriptor_CM_overrides name1=value1 name2=value2

-

--process_descriptor_DD [descriptor]

- This specifies a UIMA Deployment Descriptor for the job processes for

DD-style jobs. This is mutually exclusive with --process_descriptor_AE, --process_descriptor_CM, and

--process_descriptor_CC. This descriptor is a resource that is searched for in the filesystem or Java classpath as

described in the notes below. Example:

--process_descriptor_DD /home/billy/resource/DD_foo.xml

Alias: --process_DD

-

--process_failures_limit [integer]

- This specifies the maximum number of individual Job Process (JP) failures allowed

before killing the job. The default is twenty(20). If this limit is exceeded over the lifetime of a job DUCC terminates the

entire job. Example:

--process_failures_limit 23

-

--process_initialization_failures_cap [integer]

- This specifies the maximum number of failures during a

UIMA process’s initialization phase. If the number is exceeded the system will allow processes which are

already running to continue, but will assign no new processes to the job. The default is ninety-nine(99).

Example:

--process_initialization_failures_cap 62

Note that the job is NOT killed if there are processes that have passed initialization and are running. If this limit is

reached, the only action is to not start new processes for the job.

-

--process_initialization_time_max [integer]

- This is the maximum time in minutes that a process is allowed to remain

in the “initializing” state, before DUCC terminates it. The error counts as an initialization error towards the

initialization failure cap.

-

--process_jvm_args [list]

- This specifies additional arguments to be passed to all of the job processes as a

blank-delimited list of strings. Example:

--process_jvm_args -Xmx400M -Xms100M

Note: When used as a CLI option, the arguments must usually be quoted to protect them from the

shell.

-

--process_memory_size [size]

- This specifies the maximum amount of RAM in GB to be allocated to each Job Process.

This value is used by the Resource Manager to allocate resources.

-

--process_per_item_time_max [integer]

- This specifies the maximum time in minutes that the Job Driver will wait

for a Job Processes to process a CAS. If a timeout occurs the process is terminated and the CAS marked in error (not

retried). If not specified, the default is 24 hours. Example:

--process_per_item_time_max 60

-

--process_error_window_threshold [integer]

- This specifies an upper bound for number of process errors a JP can

tolerate. When this threshold is reached, the JP will terminate. If not specified, the default is 1 which means terminate

on first process error. Example:

--process_error_window_threshold 2

-

--process_error_window_size [integer]

- This specifies a window the JP error handler uses to determine if it should

terminate on process error. When a defined number of errors in a given window is reached, the JP will terminate. If not

specified, the default is 1 which means terminate on first process error. This property is used together with

--process_error_window_threshold. Example:

--process_error_window_size 10

-

--process_pipeline_count [integer]

- This specifies the number of pipelines per process to be deployed, i.e.

the number of work-items each JP will process simultaneously. It is used by the Resource Manager to

determine how many processes are needed, by the Job Process wrapper to determine how many threads to

spawn, and by the Job Driver to determine how many CASs to dispatch. If not specified, the default is 4.

Example:

--process_pipeline_count 7

Alias: --process_thread_count

-

--scheduling_class [classname]

- This specifies the name of the scheduling class the used to determine the resource

allocation for each process. The names of the classes are installation dependent. If not specified, the FAIR_SHARE

default is taken from the site class definitions file described here. Example:

--scheduling_class normal

-

--service_dependency[list]

- This specifies a blank-delimited list of services the job processes are dependent upon. Service

dependencies are discussed in detail here. Example:

--service_dependency UIMA-AS:Service1:tcp:host1:61616 UIMA-AS:Service2:tcp:host2:123

-

--specification, -f [file]

- All the parameters used to submit a job may be placed in a standard Java properties file. This

file may then be used to submit the job (rather than providing all the parameters directory to submit). The leading --

is omitted from the keywords.

For example,

ducc_submit --specification job.props

ducc_submit -f job.props

where job.props contains:

working_directory = /home/bob/projects/ducc/ducc_test/test/bin

process_failures_limit = 20

driver_descriptor_CR = org.apache.uima.ducc.test.randomsleep.FixedSleepCR

environment = AE_INIT_TIME=10000 UIMA LD_LIBRARY_PATH=/a/bogus/path

log_directory = /home/bob/ducc/logs/

process_pipeline_count = 1

driver_descriptor_CR_overrides = jobfile:../simple/jobs/1.job compression:10

process_initialization_failures_cap = 99

process_per_item_time_max = 60

driver_jvm_args = -Xmx500M

process_descriptor_AE = org.apache.uima.ducc.test.randomsleep.FixedSleepAE

classpath = /home/bob/duccapps/ducky_process.jar

description = ../simple/jobs/1.job[AE]

process_jvm_args = -Xmx100M -DdefaultBrokerURL=tcp://localhost:61616

scheduling_class = normal

process_memory_size = 15

Note that properties in a specification file may be overridden by other command-line parameters, as discussed

here.

-

--suppress_console_log

- If specified, suppress creation of the log files that normally hold the redirected stdout and

stderr.

-

--timestamp

- If specified, messages from the submit process are timestamped. This is intended primarily for use with a

monitor with –wait_for_completion.

-

--wait_for_completion

- If specified, the submit command monitors the job and prints periodic state and progress

information to the console. When the job completes, the monitor is terminated and the submit command returns. If the

command is interrupted, e.g. with CTRL-C, the job will not be canceled unless --cancel_on_interrupt is also

specified.

-

--working_directory

- This specifies the working directory to be set by the Job Driver and Job Process processes. If not

specified, the current directory is used.

Notes:

When searching for UIMA XML resource files such as descriptors, DUCC searches either the filesystem or Java classpath

according to the following rules:

-

1.

- If the resource ends in .xml it is assumed the resource is a file in the filesystem and the path is either an

absolute path or a path relative to the specified working directory. [by location]

-

2.

- If the resource does not end in .xml, it is assumed the resource is in the Java classpath. DUCC creates a

resource name by replacing the ”.” separators with ”/” and appending ”.xml”. [by name]

3.6 ducc_cancel

Description:

The cancel CLI is used to cancel a job that has previously been submitted but which has not yet completed.

Usage:

-

Script wrapper

- $DUCC_HOME/bin/ducc_cancel options

-

Java Main

- java -cp $DUCC_HOME/lib/uima-ducc-cli.jar org.apache.uima.ducc.cli.DuccJobCancel options

Options:

-

--debug

- Prints internal debugging information, intended for DUCC developers or extended problem

determination.

-

--id [jobid]

- The ID is the id of the job to cancel. (Required)

-

--reason [quoted string]

- Optional. This specifies the reason the job is canceled for display in the web server. Note that

the shell requires a quoted string. Example:

ducc_cancel --id 12 --reason "This is a pretty good reason."

-

--dpid [pid]

- If specified only this DUCC process will be canceled. If not specified, then entire job will be canceled. The pid

is the DUCC-assigned process ID of the process to cancel. This is the ID in the first column of the Web Server’s job

details page, under the column labeled “Id”.

-

--help

- Prints the usage text to the console.

-

--role_administrator

- The command is being issued in the role of a DUCC administrator. If the user is not also a

registered administrator this flag is ignored. (This helps to protect administrators from accidentally canceling jobs they

do not own.)

Notes:

None.

3.7 ducc_reserve

Description:

The reserve CLI is used to request a reservation of resources. Reservations can be for machines based on memory

requirements. All reservations are persistent: the resources remain dedicated to the requester until explicitly returned. All

reservations are performed on an ”all-or-nothing” basis: either the entire set of requested resources is reserved, or the

reservation request fails.

All forms of ducc_reserve block until the reservation is complete (or fails) at which point the DUCC ID of the reservation and

the names of the reserved nodes are printed to the console and the command returns.

Usage:

-

Script wrapper

- $DUCC_HOME/bin/ducc_reserve options

-

Java Main

- java -cp $DUCC_HOME/lib/uima-ducc-cli.jar org.apache.uima.ducc.cli.DuccReservationSubmit

options

Options:

-

--cancel_on_interrupt

- If specified, the request is monitored and will be canceled if the reserve command is

interrupted, e.g. with CTRL-C. This option always implies --wait_for_completion.

-

--debug

- Prints internal debugging information, intended for DUCC developers or extended problem

determination.

-

--description [text]

- The text is any string used to describe the reservation. It is displayed in the Web Server.

-

--help

- Prints the usage text to the console.

-

--memory_size [integer]

- This specifies the amount of memory the reserved machine must support. After

rounding up it must match the total usable memory on the machine. (Required)

Alias: --instance_memory_size

-

--scheduling_class [classname]

- This specifies the name of the scheduling class used to determine the resource

allocation for each process. It must be one implementing the RESERVE policy. If not specified, the RESERVE

default is taken from the site class definitions file described here.

-

-f, --specification [file]

- All the parameters used to request a reservation may be placed in a standard Java

properties file. This file may then be used to submit the request (rather than providing all the parameters

directory to submit).

-

--timestamp

- If specified, messages from the submit process are timestamped. This is intended primarily for use

with a monitor with –wait_for_completion.

-

--wait_for_completion

- By default, the reserve command monitors the request and prints periodic state and

progress information to the console. When the reservation completes, the monitor is terminated and the reserve

command returns. If the command is interrupted, e.g. with CTRL-C, the request will not be canceled unless

--cancel_on_interrupt is also specified. If this option is disabled by specifying a value of “false”, the command

returns as soon as the request has been submitted.

Notes:

Reservations must be for entire machines, in a job class implementing the RESERVE scheduling policy. The default DUCC

distribution configures class reserve for entire machine reservations. A reservation request will be queued if there is no

available machine in that class matching the requested size (after rounding up), or up to ducc.rm.reserve_overage larger than

the request (after rounding up). The user may cancel the request with ducc_unreserve or with CTRL-C if

--cancel_on_interrupt was specified.

3.8 ducc_unreserve

Description:

The unreserve CLI is used to release reserved resources.

Usage:

-

Script wrapper

- $DUCC_HOME/bin/ducc_unreserve options

-

Java Main

- java -cp $DUCC_HOME/lib/uima-ducc-cli.jar org.apache.uima.ducc.cli.DuccReservationCancel options

Options:

-

--debug

- Prints internal debugging information, intended for DUCC developers or extended problem

determination.

-

--id [jobid]

- The ID is the id of the reservation to cancel. (Required)

-

--help

- Prints the usage text to the console.

-

--role_administrator

- The command is being issued in the role of a DUCC administrator. If the user is not also a

registered administrator this flag is ignored. (This helps to protect administrators from inadvertently canceling

jobs they do not own.)

Notes:

None.

2y

3.9 ducc_process_submit

Description:

Use ducc_process_submit to submit a Managed Reservation, also known as an arbitrary process to DUCC. The intention of

this function is an alternative to utilities such as ssh, in order to allow the spawned processes to be fully managed by DUCC.

This allows the DUCC scheduler to allocate the necessary resources (and prevent over-allocation), and the DUCC run-time

environment to manage process lifetime.

If attach_console is specified, Stdin, Stderr, and Stdout of the remote process are redirected to the submitting

console. It is thus possible to run interactive sessions with remote processes where the resources are managed by

DUCC.

Usage:

-

Script wrapper

- $DUCC_HOME/bin/ducc_process_submit options

-

Java Main

- java -cp $DUCC_HOME/lib/uima-ducc-cli.jar

org.apache.uima.ducc.cli.DuccManagedReservationSubmit options

Options:

-

--attach_console

- If specified, remote process stdout and stderr are redirected to, and stdin redirected from, the

local submitting console.

-

--cancel_on_interrupt

- If specified, the remote process is monitored and will be canceled if the submit command

is interrupted, e.g. with CTRL-C. This option always implies --wait_for_completion.

-

--description [text]

- The text is any string used to describe the process. It is displayed in the Web Server. When

specified on a command-line the text usually must be surrounded by quotes to protect it from the shell.

-

--debug

- Prints internal debugging information, intended for DUCC developers or extended problem

determination.

-

--environment [env vars]

- Blank-delimited list of environment variables and variable assignments. Entries will be copied

from the user’s environment if just the variable name is specified, optionally with a final ’*’ for those with the same

prefix. If specified, this is used for all DUCC processes in the job. Example:

--environment TERM=xterm DISPLAY=:1.0 LANG UIMA_*

Additional entries may be copied from the user’s environment based on the setting of ducc.submit.environment.propagated

in the global DUCC configuration ducc.properties.

Note: When used as a CLI option, the environment string must usually be quoted to protect it from the

shell.

-

--help

- Prints the usage text to the console.

-

--log_directory [path-to-log directory]

-

This specifies the path to the directory for the user logs. If not specified, the default is $HOME/ducc/logs.

Example:

--log_directory /home/bob

Within this directory DUCC creates a sub-directory for each process, using the numerical ID of the job. The format of

the generated log file names as described here.

Note: Note that --log_directory specifies only the path to a directory where logs are to be stored. In order to manage

multiple processes running in multiple machines DUCC, sub-directory and file names are generated by DUCC and may

not be directly specified.

-

--process_executable [program name]

- This is the full path to a program to be executed. (Required)

-

--process_executable_args [argument list]

- This is a list of arguments for process_executable, if any.

When specified on a command-line the text usually must be surrounded by quotes to protect it from the

shell.

-

--process_memory_size [size]

- This specifies the maximum amount of RAM in GB to be allocated to each process. This

value is used by the Resource Manager to allocate resources. if this amount is exceeded by a process the Agent

terminates the process with a ShareSizeExceeded message.

-

--scheduling_class [classname]

- This specifies the name of the scheduling class the RM will use to determine the

resource allocation for each process. The names of the classes are installation dependent. If not specified, the

FIXED_SHARE default is taken from the site class definitions file described here.

-

--specification, -f [file]

- All the parameters used to submit a process may be placed in a standard Java properties file.

This file may then be used to submit the process (rather than providing all the parameters directory to

submit).

For example,

ducc_process_submit --specification job.props

ducc_process_submit -f job.props

where job.props contains:

working_directory = /home/bob/projects

environment = AE_INIT_TIME=10000 LD_LIBRARY_PATH=/a/bogus/path

log_directory = /home/bob/ducc/logs/

description = Simple Process

scheduling_class = fixed

process_memory_size = 15

-

--suppress_console_log

- If specified, suppress creation of the log files that normally hold the redirected stdout and

stderr.

-

--timestamp

- If specified, messages from the submit process are timestamped. This is intended primarily for use with a

monitor with –wait_for_completion.

-

--wait_for_completion

- If specified, the submit command monitors the remote process and prints periodic state and

progress information to the console. When the process completes, the monitor is terminated and the submit

command returns. If the command is interrupted, e.g. with CTRL-C, the request will not be canceled unless

--cancel_on_interrupt is also specified.

-

--working_directory

- This specifies the working directory to be set by the Job Driver and Job Process processes. If not

specified, the current directory is used.

Notes:

3.10 ducc_process_cancel

Description:

The cancel CLI is used to cancel a process that has previously been submitted but which has not yet completed.

Usage:

-

Script wrapper

- $DUCC_HOME/bin/ducc_process_cancel options

-

Java Main

- java -cp $DUCC_HOME/lib/uima-ducc-cli.jar

org.apache.uima.ducc.cli.DuccManagedReservationCancel options

Options:

-

--debug

- Prints internal debugging information, intended for DUCC developers or extended problem

determination.

-

--id [jobid]

- The DUCC ID is the id of the process to cancel. (Required)

-

--help

- Prints the usage text to the console.

-

--reason [quoted string]

- Optional. This specifies the reason the process is canceled, for display in the web server.

-

--role_administrator

- The command is being issued in the role of a DUCC administrator. If the user is not also a

registered administrator this flag is ignored. (This helps to protect administrators from inadvertently canceling

work they do not own.)

Notes:

None.

3.11 ducc_services

Description:

The ducc_services CLI is used to manage service registration. It has a number of functions as listed below.

The functions include:

-

Register

- This registers a service with the Service Manager by saving a service specification in the Service Manager’s

registration area. The specification is retained by DUCC until it is unregistered.

The registration consists primarily of a service specification, similar to a job specification. This specification

is used when the Service Manager needs to start a service instance. The registered properties for a service are

made available for viewing from the DUCC Web Server’s service details page.

-

Unregister

- This unregisters a service with the Service Manager. When a service is unregistered DUCC stops the

service instance and moves the specification to history.

-

Start

- The start function instructs DUCC to allocate resources for a service and to start it in those resources. The

service remains running until explicitly stopped. DUCC will attempt to keep the service instances running if

they should fail. The start function is also used to increase the number of running service instances if desired.

-

Stop

- The stop function stops some or all service instances.

-

Modify

- The modify function allows most aspects of a registered service to be updated without re-registering the

service. Where feasible the modification takes place immediately; otherwise the service must be stopped and

restarted.

-

Disable

- This prevents additional instances of a service from being spawned. Existing instances are not affected.

-

Enable

- This reverses the effect of a manual disable command or an automatic disable of the service due to excessive

errors.

-

Ignore References

- A reference started service no longer exits after the last work referencing the service exits. It

remains running until a manual stop is performed.

-

Observe References

- A manually started service is made to behave like a reference-started service and will

terminate after the last work referencing the service has exited (plus the configured linger time).

-

Query

- The query function returns detailed information about all known services, both registered and otherwise.

Usage:

-

Script wrapper

- $DUCC_HOME/bin/ducc_services options

-

Java Main

- java -cp $DUCC_HOME/lib/uima-ducc-cli.jar org.apache.uima.ducc.cli.DuccServiceApi options

The ducc_services CLI requires one of the verbs “register”, “unregister”, “start”, “stop”, “query”, or “modify”. Other

arguments are determined by the verb as described below.

Options:

3.11.1 Common Options

These options are common to all of the service verbs:

-

--debug

- Prints internal debugging information, intended for DUCC developers or extended problem

determination.

-

--help

- Prints the usage text to the console.

[

options]]

3.11.2 ducc_services –register [specification file] [options]

The register function submits a service specification to DUCC. DUCC stores this information until it is unregistered. Once

registered, a service may be started, stopped, etc.

The specification file is optional. If designated, it is a Java properties file containing other registration options, minus the

leading “–”. If both a specification file and command-line options are designated, the command-line options override those in

the specification.

The options describing the service include:

-

--autostart [true or false]

- This indicates whether to register the service as an autostarted service. If not

specified, the default is false.

-

--classpath [path-string]

- The CLASSPATH used for the service, if the service is a UIMA-AS services. If not

specified, the CLASSPATH of the process invoking this request is used.

-

--classpath_order [user-before-ducc | ducc-before-user]

- OBSOLETE - ignored.

-

--debug

- Enable debugging messages. This is primarily for debugging DUCC itself.

-

--description [text]

- The text is any quoted string used to describe the job. It is displayed in the Web Server.

Note: When used as a CLI option, the description string must usually be quoted to protect it from the shell.

-

--environment [env vars]

- Blank-delimited list of environment variables and variable assignments for the service. Entries

will be copied from the user’s environment if just the variable name is specified, optionally with a final ’*’ for those

with the same prefix. Example:

--environment TERM=xterm DISPLAY=:1.0 LANG UIMA_*

Additional entries may be copied from the user’s environment based on the setting of

ducc.submit.environment.propagated

in the global DUCC configuration ducc.properties.

Note: When used as a CLI option, the environment string must usually be quoted to protect it from the

shell.

-

--help

- This prints the usage text to the console.

-

--instances [n]

- This specifies the number of instances to start when the service is started. If not specified, the default is 1.

Each instance has the DUCC_SERVICE_INSTANCE environment variable set to a unique sequence number, starting

from 0. If an instabce is restarted it will be assigned the same number.

-

--instance_failures_window [time-in-minutes]

- This specifies the time in minutes that service instance

failures are tracked. If there are more service instance failures within this time period than are allowed by

--instance_failures_limit the service’s autostart flag is set to false and the Service Manager no longer starts instances

for the service. The instance failures may be reset by resetting the autostart flag with the --modify option, or if no

subsequent failures occur within the window.

This option pertains only to failures which occur after the service is initialized.

This value is managed the a services ping/monitor. Thus if it is dynamnically changed with the --modify option it

takes effect immediately.

-

--instance_failures_limit [number of allowable failures]

- This specifies the maximum number of service failures

which may occur with the time specified by --instance_failures_window before the Service Manager disables the

service’s autostart flag. The accounting of failures may be reset by resetting the autostart flag with the --modify

option or if no subsequent failures occur within the time window.

This option pertains only to failures which occur after the service is initialized.

This value is managed the a services ping/monitor. Thus if it is dynamnically changed with the --modify option the

current failure counter is reset and the new value takes effect immediately.

-

--instance_init_failures_limit [number of allowable failures]

- This specifies the number of consecutive

failures allowed while a service is in initialization state. If the maximum is reached, the service’s autostart

flag is turned off. The accounting may be reset by reeenabling autostart, or if a successful initialization

occurs.

-

--jvm [path-to-java]

- This specifies the JVM to use for UIMA-AS services. If not specified, the same JVM used by the

Agents is used.

Note: The path must be the full path the the Java executable (not simply the JAVA_HOME environment variable.).

Example:

--jvm /share/jdk1.6/bin/java

-

--process_jvm_args [list]

- This specifes extra JVM arguments to be provided to the server process for UIMA-AS

services. It is a blank-delimited list of strings. Example:

--process_jvm_args -Xmx100M -Xms50M

Note: When used as a CLI option, the argument string must usually be quoted to protect it from the

shell.

-

--log_directory [path-to-log directory]

- This specifies the path to the directory for the individual service instance logs.

If not specified, the default is $HOME/ducc/logs. Example:

--log_directory /home/bob

Within this directory DUCC creates a subdirectory for each job, using the numerical ID of the job. The format of the

generated log file names as described here.

Note: Note that --log_directory specifies only the path to a directory where logs are to be stored. In order to manage

multiple processes running in multiple machines DUCC, sub-directory and file names are generated by DUCC and may

not be directly specified.

-

--process_descriptor_DD [DD descriptor]

- This specifies the UIMA Deployment Descriptor for UIMA-AS

services.

-

--process_debug [host:port]

- The specifies a debug port that a service instance connects to when it is started. If

specified, only a single service instance is started by the Service Manager regardless of the number of instances

specified. The service instance’s JVM options are enhanced so the service instance starts in debug mode with the

correct call-back host and port. The host and port are used for the callback.

To disable debugging, user the --modify service option to set the host:port to the string “off”.

-

--process_executable [program-name]

- For CUSTOM services, this specifies the full path of the program to

execute.

-

--process_executable_args [list-of-arguments]

- For CUSTOM services, this specifies the program arguments, if

any.

-

--process_memory_size [size]

- This specifies the maximum amount of RAM in GB to be allocated to each Job Process.

This value is used by the Resource Manager to allocate resources.

-

--scheduling_class [classname]

- This specifies the name of the scheuling class the RM will use to determine the resource

allocation for each process. The names of the classes are installation dependent. If not specified, the FIXED_SHARE

default is taken from the site class definitions file described here.

-

--service_dependency[list]

- This specifies a blank-delimited list of services the job processes are dependent upon. Service

dependencies are discussed in detail here. Example:

--service_dependency UIMA-AS:Service1:tcp:node682:61616 UIMA-AS:OtherSvc:tcp:node123:123

Note: When used as a CLI option, the list must usually be quoted to protect it from the shell.

-

--service_linger [milliseconds]

- This is the time in milliseconds to wait after the last referring job or service exits before

stopping a non-autostarted service.

-

--service_ping_arguments [argument-string]

- This is any arbitrary string that is passed to the init() method of the

service pinger. The contents of the string is entirely a function of the specific service. If not specified, a null is passed

in.

Note: When used as a CLI option, the string must usually be quoted to protect it from the shell, if it contains

blanks.

The build-in default UIMA-AS pinger supports an argument string of the following form (with NO embedded

blanks):

service_ping_arguments=broker-jmx-port=pppp,meta-timeout=tttt

The keywords in the string have the following meaning:

-

broker-jmx-port=pppp

- This is the JMX port for the service’s broker. If not specified, the default of 1099

is used. This is used to gather ActiveMQ statistics for the service.

Sometimes it is necessary to disable the gathering of ActiveMQ statistics through JMX; for example, if

the queue is accessed via HTTP instead of TCP. To disable JMX statistics, specify the port as

“none”.

service_ping_arguments=broker-jmx-port=none

-

meta-timeout=tttt

- This is the time, in milliseconds, to wait for a response to UIMA-AS get-meta. If not specified,

the default is 5000 milliseconds.

-

--service_ping_class [classname]

- This is the Java class used to ping a service.

This parameter is required for CUSTOM services.

This parameter may be specified for UIMA-AS services; however, DUCC supplies a default pinger for UIMA-AS

services.

-

--service_ping_classpath [classpath]

- If service_ping_class is specified, this is the classpath containing

service_custom_ping class and dependencies. If not specified, the Agent’s classpath is used (which will generally be

incorrect.)

-

--service_ping_dolog [true or false]

- If specified, write pinger stdout and stderr messages to a log, else suppress the log.

See Service Pingers for details.

-

--service_ping_jvm_args [string]

- If service_ping_class is specified, these are the arguments to pass to jvm when

running the pinger. The arguments are specified as a blank-delimited list of strings. Example:

--service_ping_jvm_args -Xmx400M -Xms100M

Note: When used as a CLI option, the arguments must usually be quoted to protect them from the

shell.

-

--service_ping_timeout [time-in-ms]

- This is the time in milliseconds to wait for a ping to the service. If the timer

expires without a response the ping is “failed”. After a certain number of consecutive failed pings, the service is

considered “down.” See Service Pingers for more details.

-

--service_request_endpoint [string]

- This specifies the expected service id.

This string is optional for UIMA-AS services; if specified, however, it must be of the form UIMA-AS:queue:broker-url,

and both the queue and broker must match those specified in the service DD specifier.

If the service is CUSTOM, the endpoint is required, and must be of the form CUSTOM:string where the contents of the

string are determined by the service.

-

--working_directory [directory-name]

- This specifies the working directory to be set for the service processes. If not

specified, the current directory is used.

3.11.3 ducc_services –start options

The start function instructs DUCC to allocate resources for a service and to start it in those resources. The service remains

running until explicitly stopped. DUCC will attempt to keep the service instances running if they should fail. The start

function is also used to increase the number of running service instances if desired.

-

--start [service-id or endpoint]

- This indicates that a service is to be started. The service id is either the numeric ID

assigned by DUCC when the service is registered, or the service endpoint string. Example:

ducc_services --start 23

ducc_services --start UIMA-AS:Service23:tcp://bob.com:12345

-

--instances [integer]

- This is the number of instances to start. If omitted, sufficient instances to match the registered

number are started. If more than the registered number of instances is running this command has no

effect.

If the number of instances is specified, the number is added to the currently number of running instances. Thus if five

instances are running and

ducc_services --start 33 --instances 5

is issued, five more service instances are started for service 33 for a total of ten, regardless of the number specified in

the registration.

ducc_services --start 23 --intances 5

ducc_services --start UIMA-AS:Service23:tcp://bob.com:12345 --instances 3

3.11.4 ducc_services –stop options

The stop function instructs DUCC to stop some number of service instances. If no specific number is specified, all instances

are stopped.

-

--stop [service-id or endpoint]

- This specifies the service to be stopped. The service id is either the numeric ID assigned

by DUCC when the service is registered, or the service endpoint string. Example:

ducc_services --stop 23

ducc_services --stop UIMA-AS:Service23:tcp://bob.com:12345

-

--instances [integer]

- This is the number of instances to stop. If omitted, all instances for the service are stopped. If the

number of instances is specified, then only the specified number of instances are stopped. Thus if ten instances are

running for a service with numeric id 33 and

ducc_services --stop 33 --instances 5

is issued, five (randomly selected) service instances are stopped for service 33, leaving five running. The

registered number of instances is never reduced to zero even if the number of running instances is reduced to

zero.

Example:

ducc_services --stop 23 --intances 5

ducc_services --stop UIMA-AS:Service23:tcp://bob.com:12345 --instances 3

3.11.5 ducc_services –enable options

The enable function removes the disabled flag and allows a service to resume spawning new instances according to its

management policy.

-

--enable [service-id or endpoint]

- Removes the disabled status, if any. Example:

ducc_services --enable 23

ducc_services --enable UIMA-AS:Service23:tcp://bob.com:12345

3.11.6 ducc_services –disable options

The disable function prevents the service from starting new instances. Existing instances are not affected. Use the

ducc_services –enable command to reset.

-

--disable [service-id or endpoint]

- sets the disabled status. Example:

ducc_services --disable 23

ducc_services --disable UIMA-AS:Service23:tcp://bob.com:12345

3.11.7 ducc_services –observe_references options

If the service is not autostarted and has active instances, this instructs the Service Manager to track references

to the service, and when the last referencing service exits, stop all instances. The registered linger time is

observed after the last reference exits before stopping the service. See the management policy section for more

information.

-

--observe_references [service-id or endpoint]

- Instructs the SM to manage the service as a reference-started service.

Example:

ducc_services --observe_references 23

ducc_services --observe_references UIMA-AS:Service23:tcp://bob.com:12345

3.11.8 ducc_services –ignore_references options

If the service is manually started and has active instances, this instructs the Service Manager to NOT stop the service when

the last referencing job has exited. It transforms a manually-started service into a reference-started service. See the

management policy section for more information.

-

--ignore_references [service-id or endpoint]

- Instructs the SM to manage the service as a reference-started service.

Example:

ducc_services --igmore_references 23

ducc_services --ignore_references UIMA-AS:Service23:tcp://bob.com:12345

3.11.9 ducc_services –modify options

The modify function dynamically updates some of the attributes of a registered service. All service options as

described under --register other than the service_endpoint and process_descriptor_DD may be modified wihtout

re-registering the service. In most cases the service will need to be stopped and restarted for the update to

apply.

The modify option is of the following form:

-

--modify [service-id or endpoint]

- This identifies the service to modify. The service id is either the numeric ID assigned

by DUCC when the service is registered, or the service endpoint string. Example:

ducc_services --modify 23 --instances 3

ducc_services --modify UIMA-AS:Service23:tcp://bob.com:12345 --intances 2

The following modifications take place immediately without the need to restart the service:

- instances

- autostart

- service_linger

- process_debug

- instance_init_failures_limit

Modifying the following registration options causes the service pinger to be stopped and started, without affecting any of the

service instances themselves. The pinger is restarted even if the modification value is the same as the old value. (A good way

to restart a possibly errant pinger is to modify it’s service_ping_dolog from “true” to “true” or from “false” to

“false”.)

- service_ping_arguments

- service_ping_class

- service_ping_classpath

- service_ping_jvmargs

- service_ping_timeout

- service_ping_dolog

3.11.10 ducc_services –query options

The query function returns details about all known services of all types and classes, including the DUCC ids of the service

instances (for submitted and registered services), the DUCC ids of the jobs using each service, and a summary of each

service’s queue and performance statistics, when available.

All information returned by ducc_services --query is also available via the Services Page of the Web Server as well as the

DUCC Service API (see the JavaDoc).

-

--query [service-id or endpoint]

- This indicates that a service is to be stopped. The service id is either the

numeric ID assigned by DUCC when the service is registered, or the service endpoint string.

If no id is given, information about all services is returned.

Below is a sample service query.

The service with endpoint UIMA-AS:FixedSleepAE_5:tcp://bobmach:61617 is a registered service, whose

registered numeric id is 2. It was registered by bob for two instances and no autostart. Since it is not autostarted,

it will be terminated when it is no longer used. It will linger for 5 seconds after the last referencing job completes,

in case a subsequent job that uses it enters the system (not a realistic linger time!). It has two active instances

whose DUCC Ids are 9 and 5. It is currently used (referenced) by DUCC jobs 1 and 5.

Service: UIMA-AS:FixedSleepAE_5:tcp://bobmach291:61617

Service Class : Registered as ID 2 Owner[bob] instances[2] linger[5000]

Implementors : 9 8

References : 1 5

Dependencies : none

Service State : Available

Ping Active : true

Autostart : false

Manual Stop : false

Queue Statistics:

Consum Prod Qsize minNQ maxNQ expCnt inFlgt DQ NQ Disp

52 44 0 0 3 0 0 402 402 402

Notes:

3.12 viaducc and java_viaducc

Description:

Viaducc is a small script wrapper around the ducc_process_submit CLI to facilitate launching processes on DUCC-managed

machines, either from the command line or from an Eclipse run configuration.

When run from the command line as “viaducc”, the arguments are bundled into the form expected by ducc_process_submit

and submitted to DUCC. By default the remote stdin and stdout of the deployed process are mapped back to the command

line terminal.